Building a neural network from scratch using NumPy

Watch me struggle through constructing and training an SGD model on the MNIST dataset using Python and linear algebra!

Sun May 28 2023

Let's get one thing straight: neural networks are cool. It is no longer rare to see college undergrads or even high schoolers experimenting with neural networks. Machine learning is exploding in popularity, and that's without even mentioning the recent trends of large language models (LLMs) and generative AI (such as GANs).

Personally, the most surprising part of it is how easy it is to get started.

All you really need is to know how to code in Python and how to use a library like TensorFlow or PyTorch.

However, on this page, we will take a more traditional approach.

The Goal

My goal here is to tell my story of creating a simple perceptron from scratch that is capable of recognizing pictures of handwritten digits.

Although I like to think a comprehensive background in math is not a necessity in programming, there is no way around it for neural networks. On the plus side, it is intuitive math, and with the right abstractions, it can be simple! In fact, it is often the notation that makes it hard for a lot of people to understand the intuition behind neural networks.

Let's get started

We are going to be using Python 3 with the NumPy and matplotlib libraries, plus the built-in unittest module.

NumPy

NumPy will do the heavy lifting for matrix operations.

Matplotlib

Every data scientist needs a good way to visualize their data. For this, matplotlib is an excellent choice as we will be able to plot the performance of our perceptron during training and testing.

Unittest

I'll admit this is not my first time attempting to make a perceptron from scratch (though it is my first successful attempt!).

Debugging the thousands of parameters of a network to 'see' whether the model is working 'fine' is the worst possible strategy one could use when validating something as complex as a neural network, especially if it's made by the person I trust the least (me) to write the code!

Unit tests make this much easier. unittest will be used for validating the predictions of the model, as well as the training of the model.
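To give a feel for what such a test looks like, here is a minimal sketch using unittest. It is not one of the tests from this project: the `sigmoid` function and the `SigmoidTest` and `run_tests` names are made up for illustration. It checks two properties that are cheap to verify and easy to break: the value at zero, and that the output keeps the input's shape while staying inside (0, 1).

```python
import unittest

import numpy as np


def sigmoid(z):
    """Hypothetical activation under test: squashes inputs into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


class SigmoidTest(unittest.TestCase):
    def test_midpoint(self):
        # sigmoid(0) should be 0.5
        self.assertAlmostEqual(float(sigmoid(np.array(0.0))), 0.5)

    def test_output_range_and_shape(self):
        z = np.linspace(-10.0, 10.0, 21).reshape(3, 7)
        out = sigmoid(z)
        # The activation is element-wise, so the shape must not change
        self.assertEqual(out.shape, z.shape)
        self.assertTrue(np.all((out > 0.0) & (out < 1.0)))


def run_tests():
    """Run the test case programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SigmoidTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

In a standalone test file you would more likely end with `unittest.main()` and run it from the command line; `run_tests()` is just a convenient way to trigger the same checks from other code.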

The Plan

The standard explanation for neural networks is their imitation of the human brain. I'm not here to contest that, but it's important to look at the layers of abstraction between that explanation and what neural networks are at the lowest level.

I like to think of the neural network as just a collection of layers of neurons, and that's really it. It does no training and makes no predictions. It does nothing but store the parameters of the entire model.

On the other hand, a model is an abstraction on top of the network. It is what the user usually consumes. It trains the neural network and makes predictions parameterized by the network.

The plan is to start at the low level with the network, which includes the operations for constructing it (appending layers, choosing activation functions, and initializing random parameters). In between there is boring testing: asserting that each appended layer has the correct shape and is compatible with the previous layer and- blah blah blah...
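As a rough sketch of what "random parameters plus shape checks" can look like in NumPy (this is not the project's actual code): the `init_layers` and `check_shapes` helpers are hypothetical names, the layer sizes are an assumption based on flattened 28x28 MNIST images (784 inputs) feeding 30 hidden neurons and 10 outputs, and the normal-distribution initialization is just one common choice.

```python
import numpy as np


def init_layers(sizes, scale=0.1, seed=0):
    """Create one (weights, biases) pair per pair of adjacent layer sizes."""
    rng = np.random.default_rng(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        # Weights map n_in inputs to n_out outputs; biases start at zero
        weights = rng.normal(0.0, scale, size=(n_out, n_in))
        biases = np.zeros(n_out)
        layers.append((weights, biases))
    return layers


def check_shapes(layers):
    """Assert each layer's input width equals the previous layer's output width."""
    for (w_prev, b_prev), (w_next, _) in zip(layers[:-1], layers[1:]):
        assert b_prev.shape == (w_prev.shape[0],), "bias does not match layer width"
        assert w_next.shape[1] == w_prev.shape[0], "incompatible layer shapes"


# Assumed sizes: 784 input pixels -> 30 hidden neurons -> 10 output neurons
layers = init_layers([784, 30, 10])
check_shapes(layers)
```

Catching a shape mismatch when a layer is appended is much cheaper than discovering it as a broadcasting error halfway through training.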

Then, the model will be constructed and the interface will be made to easily be consumed as if it were a Python package itself. This is an example of how I expect it to be consumed:

```python
# Model construction
model = Model()
model.build([
    # totally didn't copy the same pattern PyTorch uses...
    Linear(30, x_flat_n, c=0.2),
    ReLU(30),
    Linear(10, c=0.05),
    Sigmoid(10),
])
```
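For readers who want to see what a stack like this computes, here is a minimal NumPy sketch of the forward pass for the same Linear, ReLU, Linear, Sigmoid pattern. It is an illustration under assumptions, not the implementation behind `Model`: the `forward` function and `params` dictionary are hypothetical, 784 stands in for `x_flat_n` assuming flattened 28x28 images, and treating 0.2 and 0.05 as the spread of the random initial weights is only a guess at what `c` means.

```python
import numpy as np


def relu(z):
    """Element-wise max(z, 0)."""
    return np.maximum(z, 0.0)


def sigmoid(z):
    """Element-wise logistic function with outputs in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def forward(x, params):
    """x has shape (batch, 784); returns scores of shape (batch, 10)."""
    hidden = relu(x @ params["w1"].T + params["b1"])          # (batch, 30)
    return sigmoid(hidden @ params["w2"].T + params["b2"])    # (batch, 10)


rng = np.random.default_rng(0)
params = {
    "w1": rng.normal(0.0, 0.2, size=(30, 784)),   # assumed meaning of c=0.2
    "b1": np.zeros(30),
    "w2": rng.normal(0.0, 0.05, size=(10, 30)),   # assumed meaning of c=0.05
    "b2": np.zeros(10),
}
batch = rng.random((4, 784))     # four fake "images" with pixel values in [0, 1)
scores = forward(batch, params)  # one row of 10 scores per image
```

Each row of `scores` holds one value per digit, and the index of the largest value is the predicted digit.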

Execution

After a week's worth of work, I successfully-

Oh well this is disappointing...

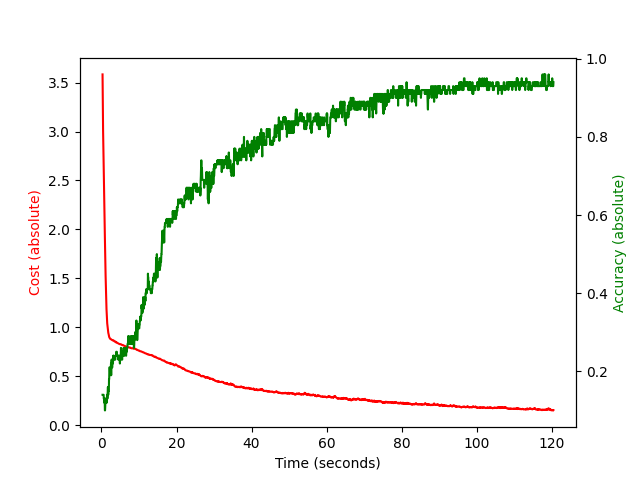

To explain what we're seeing here, this is the loss curve (red) and the accuracy curve (green). Apologies for the terrible plotting here, but accuracy is on a 0-1 scale, and as you can see, it struggles to go above 30-40%. Meanwhile, the cost plateaus at 0.9.

This means the model can correctly predict what digit a picture of a handwritten digit corresponds to at most 40% of the time. This is terrible!
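For reference, accuracy here is just the fraction of images whose highest-scoring output neuron matches the label. A minimal sketch, assuming the outputs are stored one row per image (the `accuracy` function name and the tiny 3-class example are made up):

```python
import numpy as np


def accuracy(outputs, labels):
    """Fraction of rows whose highest-scoring output neuron matches the label."""
    predictions = np.argmax(outputs, axis=1)
    return float(np.mean(predictions == labels))


# Toy example with 3 classes instead of 10
outputs = np.array([
    [0.1, 0.8, 0.1],   # predicts 1
    [0.7, 0.2, 0.1],   # predicts 0
    [0.2, 0.3, 0.5],   # predicts 2
    [0.6, 0.3, 0.1],   # predicts 0
])
labels = np.array([1, 0, 1, 2])
print(accuracy(outputs, labels))  # 0.5 (two of the four predictions are right)
```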

What went wrong?

Unfortunately, it took me a couple of days of ~~suffering~~ research to figure out what was going on. It turns out it has to do with how you encode the labels of the training data.

When doing classification problems, you usually encode your labels as one-hot encoded vectors. This essentially means you turn your label, for example 5, into a vector of 0s with the exception of a 1 in the 6th position (index 5, counting from 0).

```
0 -> [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
1 -> [0, 1, 0, 0, 0, 0, 0, 0, 0, 0]
2 -> [0, 0, 1, 0, 0, 0, 0, 0, 0, 0]
3 -> [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]
4 -> [0, 0, 0, 0, 1, 0, 0, 0, 0, 0]
5 -> [0, 0, 0, 0, 0, 1, 0, 0, 0, 0]
6 -> [0, 0, 0, 0, 0, 0, 1, 0, 0, 0]
.
.
.
9 -> [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]
```
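In NumPy this encoding takes only a few lines. The sketch below is one possible way to write it, with `one_hot` as a hypothetical helper name; it assumes the labels are integers from 0 to 9.

```python
import numpy as np


def one_hot(labels, num_classes=10):
    """Turn integer labels of shape (n,) into one-hot rows of shape (n, num_classes)."""
    labels = np.asarray(labels)
    encoded = np.zeros((labels.size, num_classes))
    # For row i, set the column given by labels[i] to 1
    encoded[np.arange(labels.size), labels] = 1.0
    return encoded


print(one_hot([5])[0])  # [0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
```

An equivalent one-liner is `np.eye(num_classes)[labels]`, which picks rows out of an identity matrix.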

Originally, I was simply subtracting the label from each of the neurons in the output layer. This equates to telling the network "Your guess of 3 when the label was 4 was wrong, but it is better than if you guessed a 9." This is not how we want to penalize the network! Instead, we want to penalize the network for being wrong equally no matter what its guess was.
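A small numerical sketch makes the difference concrete. The original buggy code is not shown here, so `raw_label_cost` is only one reading of "subtracting the label from each neuron" (the scalar label broadcast across all ten outputs), and the mean squared error, the `fake_output` helper, and the 0.7 / 0.1 output values are all assumptions made up for illustration.

```python
import numpy as np


def mse(error):
    """Mean squared error over the output neurons."""
    return float(np.mean(error ** 2))


def fake_output(guess, confident=0.7, other=0.1):
    """Made-up sigmoid-style output that favors the digit `guess`."""
    out = np.full(10, other)
    out[guess] = confident
    return out


def one_hot_cost(output, label):
    """Cost against a one-hot target: 1 at the label's position, 0 elsewhere."""
    target = np.zeros(10)
    target[label] = 1.0
    return mse(output - target)


def raw_label_cost(output, label):
    """Assumed reading of the bug: the scalar label is broadcast to every neuron."""
    return mse(output - label)


label = 4
cost_guess_3 = one_hot_cost(fake_output(3), label)   # wrong guess, numerically close
cost_guess_9 = one_hot_cost(fake_output(9), label)   # wrong guess, numerically far
```

With one-hot targets, `cost_guess_3` and `cost_guess_9` come out the same (up to floating-point rounding), so every wrong digit is penalized equally. Under the raw-label reading, the cost instead grows with the numeric value of the label, and the targets are values that sigmoid outputs in (0, 1) can never reach.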

These are the results after the fix

Much better. Even the plot got better with the added accuracy label and accuracy y-axis!

You can clearly see the accuracy curve increasing steeply at the beginning before plateauing near 90% accuracy. Now this is only the training accuracy, but the testing accuracy was around 85%.

Results

The training accuracy after just 1 epoch reached 90%. The testing accuracy was 85%.

Here is a sample of images from the testing set and their predictions.

Here we see the 5 predictions with the highest cost, meaning the model struggled to correctly predict the digit.

Then we see the same for the 5 predictions with the lowest cost that were still wrong.
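Picking out these samples comes down to sorting the per-image costs. Here is a minimal sketch of that selection, assuming the per-image cost, predicted digit, and true label are already available as arrays; the function names and the six-sample example data are made up for illustration.

```python
import numpy as np


def highest_cost(costs, k=5):
    """Indices of the k samples with the highest cost, worst first."""
    return np.argsort(costs)[::-1][:k]


def lowest_cost_wrong(costs, predicted, labels, k=5):
    """Indices of the k lowest-cost samples among the wrong predictions."""
    wrong = np.flatnonzero(predicted != labels)
    return wrong[np.argsort(costs[wrong])][:k]


# Made-up data for six test images
costs = np.array([0.02, 0.50, 0.10, 0.90, 0.30, 0.05])
predicted = np.array([1, 2, 3, 4, 5, 6])
labels = np.array([1, 7, 3, 9, 5, 0])

print(highest_cost(costs, k=2))                          # [3 1]
print(lowest_cost_wrong(costs, predicted, labels, k=2))  # [5 1]
```

The returned indices can then be used to pull the matching images out of the test set for plotting.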

These images show the model still has some improvements to make, but in my opinion these are very impressive results using a basic perceptron and training it in just 1 epoch!

Conclusion

Overall, this was an enlightening project for me. It helped ingrain the fundamentals of deep learning into my knowledge base, and I would be lying if I said this is where I stopped my journey into exploring machine learning. I have an aspiration to have my career primarily in machine learning, and this project only reinforced my ambitions.

I will continue to learn by finally exploring libraries like PyTorch and TensorFlow, where I will learn advanced and modern types of neural networks and different methods for training them.

Thanks for reading!
